Seedance 2.0 Review: Stress-Testing Character Consistency Across 5 Extreme AI Video Scenarios


AI video generation today often feels like playing the lottery. You burn $20 in credits just to get a 5-second clip where hands don’t melt and physics barely hold together.

While the industry debates Kling 3.0’s shot composition or Sora 2’s lighting realism, directors working on real narrative content know the true bottleneck isn’t lighting or camera movement — it’s Character Consistency.

Seedance 2.0 recently launched a low-key update claiming to solve multi-angle continuity, smooth transitions, and consistent characters. To see whether it lives up to the hype, I ran five extreme stress tests, using the same prompts across Seedance 2.0 and Kling 3.0.

This is what I found.

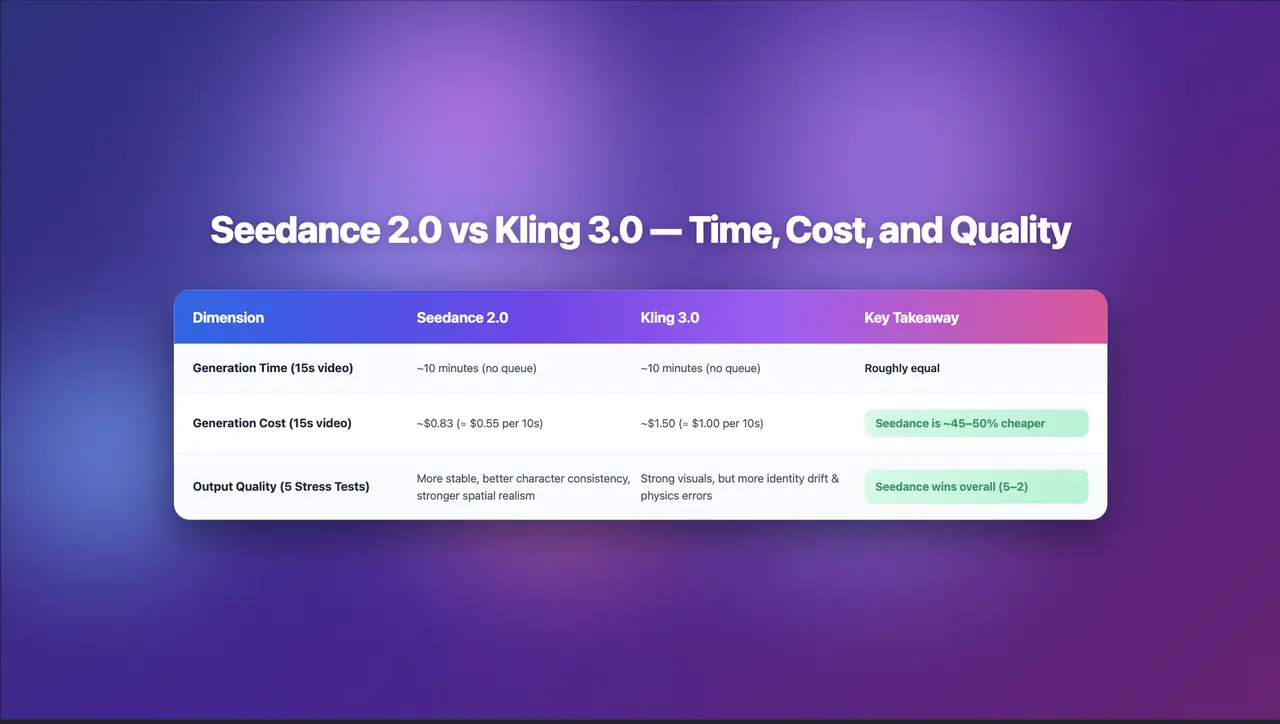

Quick Verdict: Seedance 2.0 vs Kling 3.0

Before diving into the full review, here’s a snapshot of how Seedance 2.0 and Kling 3.0 performed across five extreme AI video test scenarios, plus cost and use-case considerations.

| Dimension | Seedance 2.0 | Kling 3.0 | Key Takeaway |
| --- | --- | --- | --- |
| Generation Time (15s video) | ~10 minutes (no queue) | ~10 minutes (no queue) | Roughly equal |
| Generation Cost (15s video) | ~$0.83 (≈ $0.55 per 10s) | ~$1.50 (≈ $1.00 per 10s) | Seedance is ~45% cheaper |
| Output Quality (5 Stress Tests) | More stable, better character consistency, stronger spatial realism | Strong visuals, but more identity drift & physics errors | Seedance wins overall (5–2) |

TL;DR

Seedance 2.0 outperformed Kling 3.0 in character consistency, spatial logic, and multi-subject stability, finishing 5–2 on points: three outright wins and two ties, with each tie counted for both models. It feels less like a demo toy and more like a production-ready digital crew member, and at the prices quoted here it costs about 45% less.

Test 1 — Mona Lisa Drinking Coca-Cola

(Facial Micro-Expressions + Spatial Interaction)

Prompt concept:

The Mona Lisa glances around nervously, reaches outside the painting to drink a Coke, reacts with satisfaction, then quickly puts it back as footsteps approach. A cowboy later grabs the Coke.

Created with Seedance 2.0

Created with Kling 3.0

In basic quality testing, Seedance 2.0 delivered an impressive performance. It captured natural micro-expressions and also showed solid physical interactions.

- Technical highlight: Spatial Scaling. Seedance handles the size relationship between characters and objects very well, avoiding the "perspective collapse" common in AI videos.

- Comparison: Although Kling 3.0 has excellent dynamics, it occasionally gets the proportions between characters and objects wrong.

Winner: Seedance 2.0

Test 2 — Eating Noodles

(Fluid Simulation + Hand-Object Coordination)

“Eating noodles” has long been a nightmare scenario for AI video — fluid motion, utensil handling, and mouth coordination push models into the uncanny valley.

Created with Seedance 2.0

Created with Kling 3.0

Both Seedance 2.0 and Kling 3.0 performed impressively here, showing strong fluid and soft-body simulation.

However, Kling produced a noticeable physics error near the end: chopsticks clipped through objects, breaking immersion.

Seedance maintained stronger physical logic and spatial continuity.

Winner: Seedance 2.0

Test 3 — Cinematic Escape Scene

(Camera Language + Directorial Interpretation)

Prompt concept:

A black-clad man flees through a street, pursued by a crowd, knocks over a fruit stand, chaos and shouting erupt.

Created with Seedance 2.0

Created with Kling 3.0

Both models produce visuals with a heavy "cinematic flair." However, there’s a distinct difference in their "DNA." Without me specifying any camera movements:

- Seedance 2.0 automatically generated dynamic shot transitions — even without explicit storyboard instructions.

- Kling 3.0 followed the prompt more literally, prioritizing instruction fidelity over creative interpretation.

This becomes a philosophical question: do you want AI creative autonomy, or strict prompt obedience? Both approaches have value.

Result: Tie

Test 4 — Five-Member Girl Band Performance

(Multi-Character Consistency + Identity Locking)

This was the most punishing test — five distinct band members, multiple camera angles, synchronized performance, and consistent identity across shots.

Created with Seedance 2.0

Created with Kling 3.0

Seedance 2.0 exceeded expectations. It:

- Followed the prompt accurately

- Preserved distinct facial and stylistic traits

- Maintained stable character identity across multiple shots and angles

Flip the script to Kling, and we start seeing some cracks. In multi-character setups, Kling struggled with noticeable artifacts and identity drift. For production-level stability, Seedance wins this round hands down.

Winner: Seedance 2.0

Test 5 — 3D Snow Battle Fight Scene

(High-Speed Action + Multi-Angle Combat)

Fight choreography is the ultimate AI video stress test — fast motion, frequent physical contact, camera switching, and spatial logic.

Created with Seedance 2.0

Created with Kling 3.0

For the longest time, high-frequency physical contact was the breaking point for AI video, but we’re finally seeing a breakthrough. Both models held character consistency through high-speed motion and multi-angle cinematography, and both delivered smooth transitions.

Each model leaned into a different aesthetic:

- Seedance: grounded and realistic

- Kling: stylized and fluid

This comes down to personal taste, not technical superiority.

Result: Tie

Final Score After 5 Stress Tests

Seedance 2.0: 5

Kling 3.0: 2

Seedance wins decisively — especially in:

- Character consistency

- Spatial realism

- Multi-subject stability

- Production reliability

While their artistic styles differ, one thing is certain: these are no longer just "AI toys."

They now feel like real creative collaborators — digital crew members capable of supporting professional production pipelines.
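The review does not spell out how ties are scored. The 5–2 total is consistent with each outright win earning one point and each tie earning one point for both models, so that is the assumption behind this minimal sketch. The names `ROUND_RESULTS` and `tally` are illustrative only.

```python
# Outcome of each of the five tests, in order, as reported in this review.
ROUND_RESULTS = ["Seedance", "Seedance", "Tie", "Seedance", "Tie"]


def tally(results, models=("Seedance", "Kling")):
    """Count points per model.

    Assumption (not stated in the review): a win is worth one point to the
    winner, and a tie is worth one point to every model.
    """
    scores = {model: 0 for model in models}
    for outcome in results:
        if outcome == "Tie":
            for model in models:
                scores[model] += 1
        else:
            scores[outcome] += 1
    return scores


if __name__ == "__main__":
    print(tally(ROUND_RESULTS))
```

Counting outright wins alone, the result would read 3–0 with two ties, which describes the same five rounds.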

Cost Comparison — The Real Production Dealbreaker

For creators, quality isn’t the only factor — budget matters.

- Kling 3.0: $1.00 per 10-second clip.

- Seedance 2.0: $0.55 per 10-second clip.

At these prices, Seedance cuts generation costs by about 45%.

Even though both models require ~10 minutes per generation, that saving can decide whether a large-scale storyboard project is profitable or financially impossible.
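To see how the per-clip prices add up over a project, here is a rough sketch. It assumes cost scales linearly with clip length, which matches the 15-second figures quoted earlier. The prices are the ones quoted in this review rather than official rate cards, and the 200-clip storyboard with three takes per clip is a made-up example. All function names are illustrative.

```python
# Per-10-second prices as quoted in this review (USD); not official rate cards.
PRICE_PER_10S = {"Seedance 2.0": 0.55, "Kling 3.0": 1.00}


def clip_cost(model: str, seconds: float) -> float:
    """Cost of one generation, assuming price scales linearly with length."""
    return PRICE_PER_10S[model] * seconds / 10


def relative_saving(cheaper: str, pricier: str) -> float:
    """Fraction saved by choosing `cheaper` over `pricier` (0.45 means 45%)."""
    return 1 - PRICE_PER_10S[cheaper] / PRICE_PER_10S[pricier]


def project_cost(model: str, clips: int, seconds: float, takes_per_clip: int = 1) -> float:
    """Total spend when every clip is generated `takes_per_clip` times."""
    return clips * takes_per_clip * clip_cost(model, seconds)


if __name__ == "__main__":
    saving = relative_saving("Seedance 2.0", "Kling 3.0")
    print(f"Seedance saving vs Kling: {saving:.0%}")
    # Hypothetical storyboard: 200 ten-second clips, 3 takes each.
    for model in PRICE_PER_10S:
        print(f"{model}: ${project_cost(model, 200, 10, 3):.2f}")
```

Under the same linear assumption, a 15-second clip comes to $0.825 on Seedance and $1.50 on Kling, in line with the table's rounded figures. Retakes multiply the gap, so the number of takes you expect per usable clip matters as much as the list price.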

Final Thoughts

Seedance 2.0 impressed me more than expected.

Its character consistency, multi-angle continuity, spatial realism, and cost efficiency make it one of the most practical AI video tools currently available.

It’s not just generating clips — it’s starting to understand directorial intent.

And what I tested today barely scratches the surface. Seedance’s real potential likely extends into more advanced — and even unconventional — creative workflows.

Platform Availability — Coming Soon to Dreamface

Seedance 2.0 is set to launch soon on the Dreamface platform.

If you’re tired of uncontrollable AI randomness and want a tool that feels more director-friendly and production-ready, it’s worth testing firsthand.
