LTX-2 Explained: Evaluating an Open-Source AI Video Foundation Model in 2026

- AI Video

- Text-to-Video

- Image-to-Video

- LTX-2

The release of LTX-2 marks an important milestone in AI video generation. As one of the first open-source video foundation models designed to produce high-quality video with synchronized audio, LTX-2 has drawn significant attention from developers, researchers, and AI video practitioners.

This article provides a practical evaluation of LTX-2: what it is, what it enables, who it is best suited for, and how its capabilities translate into real-world video creation workflows.

What Is LTX-2?

LTX-2 is an open-source AI video foundation model released by Lightricks, a company known for professional creative tools. Unlike closed commercial video generators, LTX-2 is designed as a research-grade yet production-oriented model, emphasizing transparency, extensibility, and performance.

At its core, LTX-2 is built to generate high-quality video with synchronized audio, addressing one of the most complex challenges in AI video systems: aligning visual motion, timing, and sound in a coherent output.

Core Capabilities of LTX-2

Open-Source Video Foundation Model

LTX-2 is released as an open-source model, allowing developers to inspect, modify, and extend its architecture. This openness makes it particularly attractive for teams building custom pipelines or experimenting with new AI video techniques.

Synchronized Audio–Video Generation

A defining feature of LTX-2 is its ability to generate video and audio together, rather than treating audio as a post-processing layer. This approach improves temporal consistency and reduces the mismatch often seen in AI-generated video outputs.
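
To make the timing problem concrete, the sketch below maps a video frame to the slice of audio samples that should play during it. This is not LTX-2's code. It is a generic illustration of the constraint that any joint audio-video generator has to respect. The 48 kHz sample rate and the 24 fps and 29.97 fps frame rates are example assumptions, and `audio_span_for_frame` is a hypothetical helper name.

```python
from fractions import Fraction


def audio_span_for_frame(frame_index: int, fps: Fraction, sample_rate: int) -> tuple[int, int]:
    """Return the [start, end) audio sample indices covered by one video frame.

    Illustrative helper only (hypothetical, not part of any LTX-2 API).
    """
    # Each frame lasts 1/fps seconds. Convert its start and end times to
    # sample positions using exact rational arithmetic.
    start = frame_index * sample_rate / fps
    end = (frame_index + 1) * sample_rate / fps
    # Flooring both boundaries makes consecutive frames tile the audio
    # with no gaps or overlaps, even when samples-per-frame is fractional.
    return int(start), int(end)


# 48 kHz audio at 24 fps: exactly 2000 samples per frame.
print(audio_span_for_frame(1, Fraction(24), 48_000))  # (2000, 4000)

# 48 kHz audio at NTSC 29.97 fps (30000/1001): 1601.6 samples per frame,
# so individual frames alternate between 1601 and 1602 samples.
ntsc = Fraction(30000, 1001)
print(audio_span_for_frame(0, ntsc, 48_000))  # (0, 1601)
```

Using `Fraction` instead of floats keeps frame boundaries exact over long clips, which is the kind of drift a model that treats audio as a separate post-processing step has to correct afterward.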

High-Resolution and High-Frame-Rate Output

LTX-2 supports high-resolution video generation, including 4K output, along with high frame rates suitable for cinematic and professional applications. This places it closer to production use than many earlier experimental models.
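
A quick calculation shows why 4K at high frame rates is a meaningful step up. The sketch counts raw RGB pixels in the decoded output per second of video. The resolution and frame-rate pairs are example settings chosen for illustration, not LTX-2's documented presets, and the count ignores the latent-space compression that models of this kind generally use internally.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixels in the decoded video per second (before any codec compression)."""
    return width * height * fps


# Example settings for illustration only; not LTX-2's documented presets.
uhd = pixels_per_second(3840, 2160, 50)  # 4K UHD at 50 fps
hd = pixels_per_second(1280, 720, 24)    # 720p at 24 fps

print(uhd)       # 414720000
print(uhd / hd)  # 18.75
```

Under these assumptions, 4K at 50 fps means producing almost 19 times as many pixels per second as 720p at 24 fps.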

Multi-Modal Input Support

The model is designed to work with multiple input types, including:

- Text prompts

- Image or visual references

- Audio guidance

This multi-modal design gives creators and developers more control over structure, style, and motion.

Efficiency and Local Deployment

Despite its demanding output targets, LTX-2 is optimized to run efficiently on modern GPUs, making local deployment feasible for teams with suitable hardware. This reduces dependence on closed, cloud-only APIs.
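
When judging whether your hardware is "appropriate," a useful first check is how much GPU memory the model weights alone occupy at a given precision. The sketch below uses a hypothetical 10-billion-parameter count as a placeholder. It is not LTX-2's actual size, so substitute the figure from the official model card. The result is a lower bound: activations for video latents, text encoders, and the video decoder all need additional memory.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold model weights, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


# HYPOTHETICAL parameter count used as a placeholder; replace it with the
# real value from the model card before drawing conclusions.
num_params = 10e9

for dtype, nbytes in [("fp32", 4), ("bf16", 2), ("fp8", 1)]:
    print(dtype, round(weight_memory_gib(num_params, nbytes), 1))
# fp32 37.3
# bf16 18.6
# fp8 9.3
```

Halving the bytes per parameter halves the weight footprint. This is why reduced-precision checkpoints are often what makes local deployment practical on a single GPU.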

Who Is LTX-2 Best Suited For?

LTX-2 is a powerful model, but it is not designed for everyone.

Well suited for:

- AI researchers exploring video generation

- Developers building custom video pipelines

- Creative technology teams experimenting with new formats

- Studios with in-house technical resources

Less suited for:

- Creators who want instant, no-setup video output

- Users without access to GPU resources

- Teams seeking ready-made templates or simplified workflows

In short, LTX-2 excels as a model-level innovation but requires technical expertise to unlock its full potential.

From Model Innovation to Practical Creation

Models like LTX-2 represent major progress at the infrastructure and research layer of AI video. However, most creators ultimately care less about model architecture and more about questions such as:

- How quickly can I generate usable videos?

- Can I switch between different generation styles easily?

- Do I need to manage deployment, inference, and hardware?

This is where the distinction between models and creation platforms becomes critical.

Using AI Video Capabilities in Practice with DreamFace

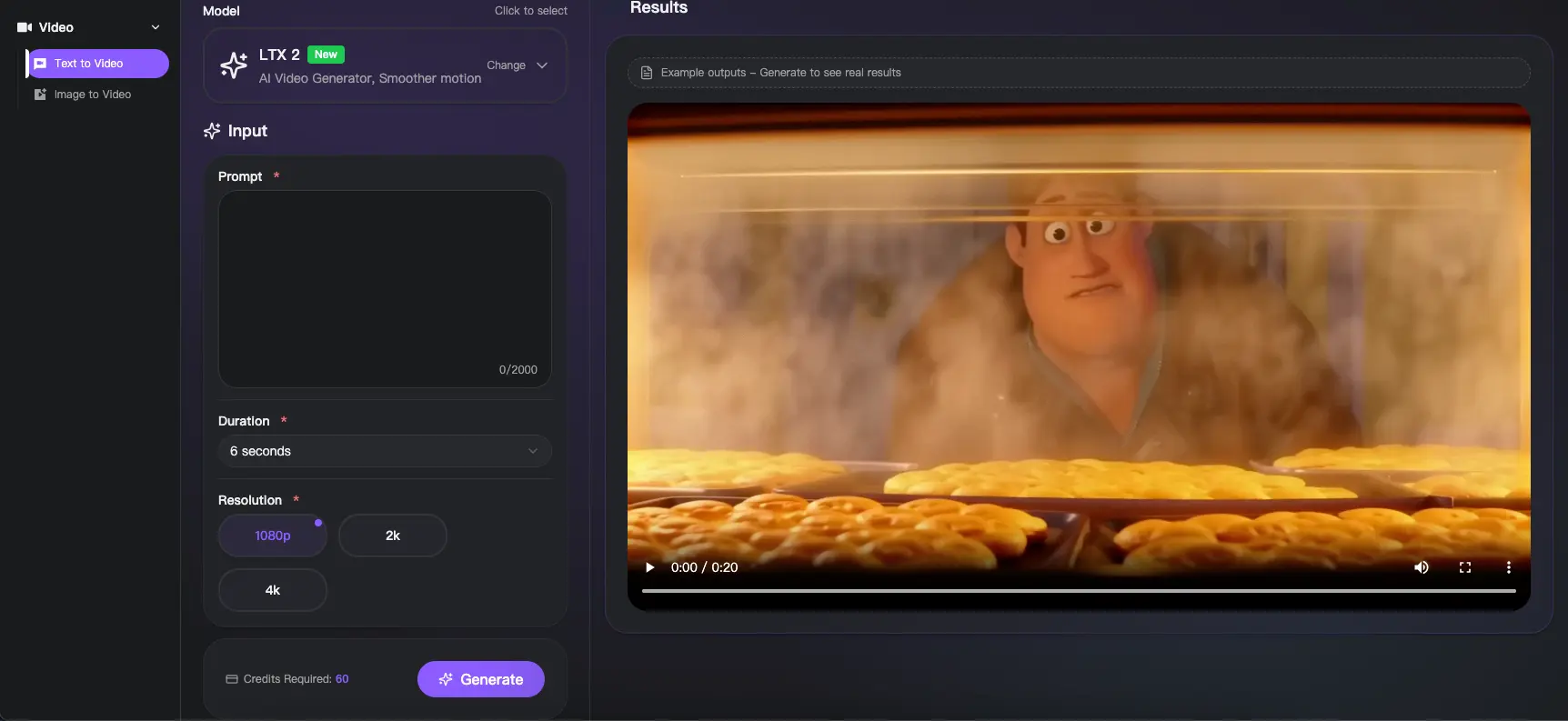

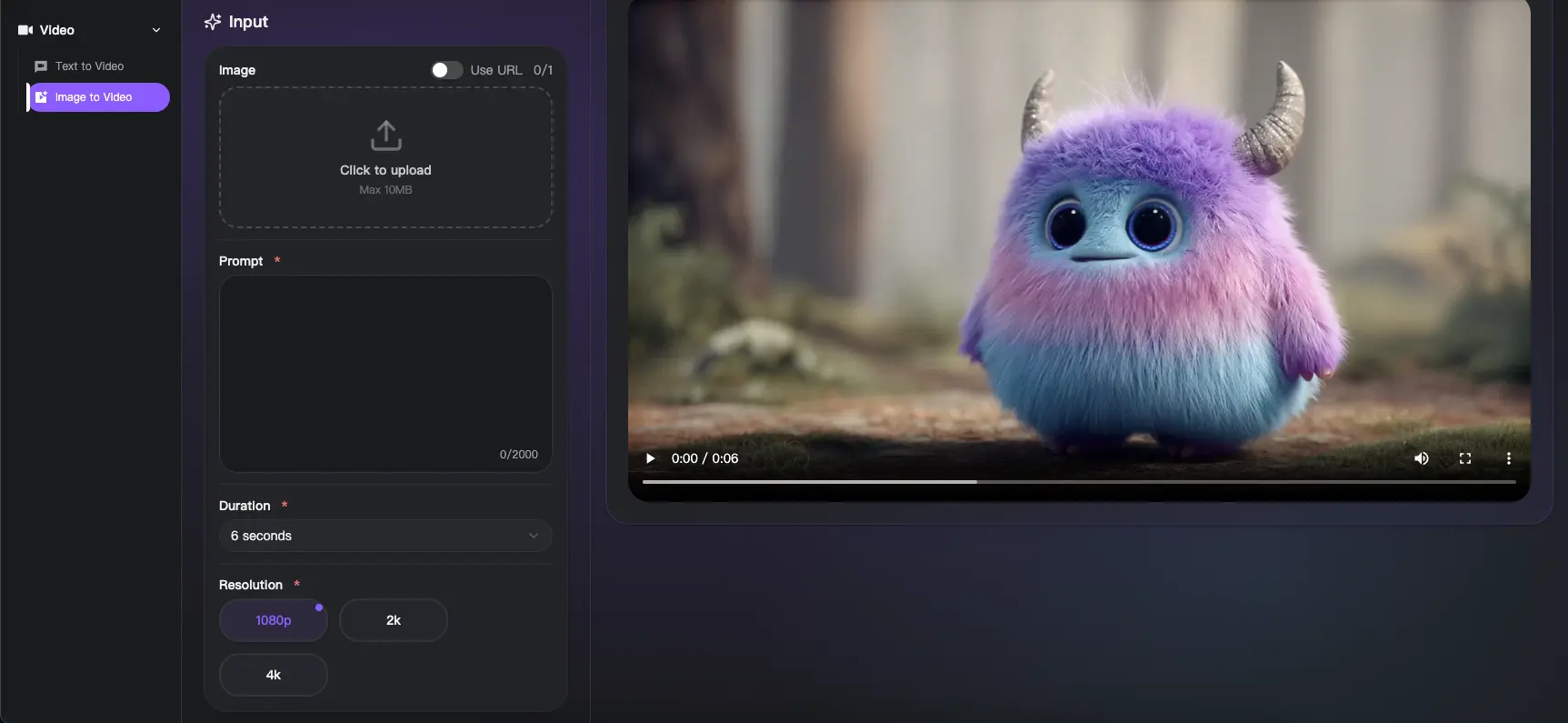

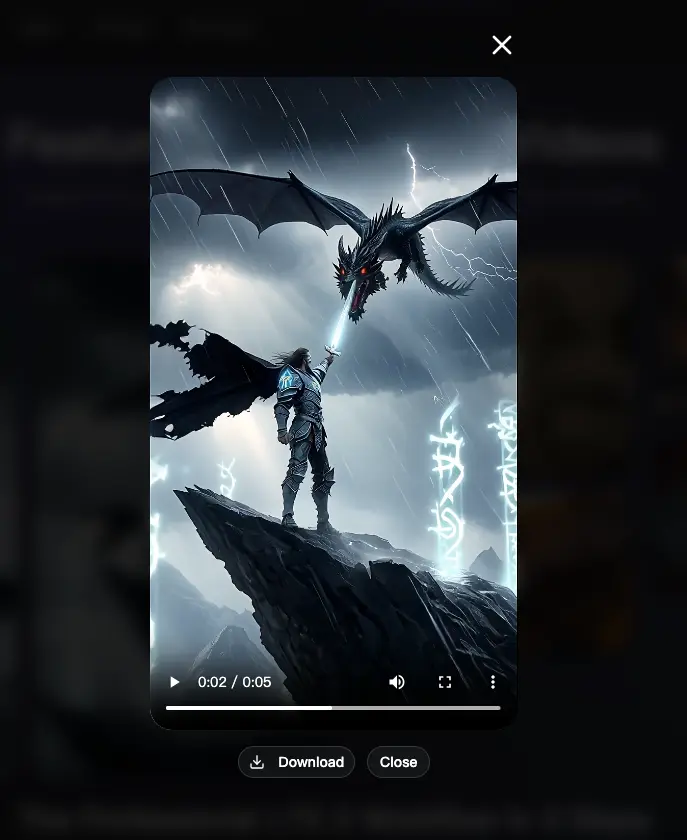

For creators who are inspired by the capabilities demonstrated by models like LTX-2 but want a practical, ready-to-use workflow, platforms that aggregate multiple AI video models play an important role. DreamFace provides access to several AI video generation models within a single interface, allowing creators to experiment with different approaches without handling model deployment directly. Available options include:

- Dream Video 1.0 & 1.5 – template-based video generation with support for start and end frames

- Seedance 1.5 Pro – optimized for expressive motion and precise audio-video synchronization

- Google Veo Fast series – designed for faster generation and rapid iteration

- Vidu Q2 – reference-based video generation focused on character consistency

Rather than replacing open-source models like LTX-2, DreamFace operates at the application layer, translating advanced AI video capabilities into workflows that creators can use immediately.

Key Takeaways

- LTX-2 represents a significant step forward for open-source AI video generation, particularly in synchronized audio-video output and high-resolution performance.

- It is best suited for developers and teams with the technical capacity to integrate and customize AI models.

- For creators who want to apply similar AI video capabilities without managing infrastructure, platform-level solutions provide a more accessible entry point.

- DreamFace serves as one such entry point, enabling creators to explore diverse AI video models through a unified creation workflow.

Final Thoughts

LTX-2 highlights where AI video technology is heading: more open, more powerful, and closer to production-ready quality. As the ecosystem evolves, the combination of open-source model innovation and creator-focused platforms will play a key role in shaping how AI video is adopted at scale.

For teams evaluating the future of AI video creation, understanding both layers—the model and the platform—is essential.
